Welcome back! This is the 3rd post in a series of posts focusing on performance monitoring for Analysis Services.

- Performance Monitoring for SSAS – Intro

- Performance Monitoring for SSAS – Data Collection

- Performance Monitoring for SSAS – Extended Events Cheat Sheet

- Performance Monitoring for SSAS – Perfmon Counter Cheat Sheet

- Performance Monitoring for SSAS – Extracting Information

In this third post, we’re going to run through the list of Extended Events that you’ll want to collect along with an explanation on the type of performance-related information you can derive from them.

Extended Events (xEvents)

xEvents is the utility used see what’s happening on the server – when did it start, how long did it take, what else was going on at the same time. From a performance perspective it’s only going to be able to show you what was slow and when it was slow – which leaves the million dollar question unanswered: why was it slow? Don’t worry, we’ll get to that in a minute. For now, let’s stick to the plan and cover the xEvents you should be capturing…

Command Events: Command End

Commands events fire whenever structures in an Analysis Services database (e.g. dimensions, measure groups, partitions, etc) are processed, created, modified, or deleted. They are also fired during maintenance-related activity such as backups and restores. Including the Command End event in performance monitoring related traces is a good idea because it provides context for several major types of activity that can negatively impact an Analysis Services query or processing workload. For example, running MDX/DAX queries while the cube/tabular model is being processed or backed up is going to result in a slower response time.

Note: It is not necessary to include the Command Begin event as all relevant information (mainly the start time of the command) can be derived from the corresponding Command End event. The same logic applies to several other events including Progress Report Begin and Query Begin events.

Progress Reports: Progress Report End

This event is very similar to the Command End event (above) but provides a lot more detail. For example, when it comes to processing, the Command End event will only be able to tell you the start time, end time, and duration of a processing command. However, the Progress Report End events will tell you the start time, end time, and duration for each of the major components (e.g. dimensions, measure groups, partitions, etc) down to the various phases of processing for each major component (e.g. Execute SQL, Read Data, Write Data, Build Decode, Build Index, Compress Segment, etc).

Note: Technically you could use Progress Report End events to derive most of the information obtained from Command End events, but the overhead of collecting Command End events is about as close to zero as you can get and its much easier to process and analyze.

In addition to all the juicy information related to processing workloads, this event can also be used to gather detailed information (e.g. start time, end time, and duration) related to query workloads such as partition scans and aggregation-hits. It even provides the SQL used to retrieve data from the source for queries that hit ROLAP partitions.

Queries Events: Query End

This event provides the start time, end time, duration, cpu time, user name, and MDX/DAX text associated with every single query executed against the Analysis Services instance. It will also provide the application associated with the connection (if a value is being specified by the application). If you were stuck on a deserted island and could only take a single extended event…this would be the one.

Note: If your users are connecting to an Analysis Services database (Multidimensional or Tabular) via the Power BI Enterpise Gateway, you will want to include the [Query Begin] event in order to obtain the actual end-user associated with the query. The [Query End] event only shows the (instance-level admin) account used during the PBI Enterprise Gateway setup.

Query Processing Events: Calculate Non Empty End

This event is fired anytime empty tuples are eliminated from a set (e.g. via use of NON EMPTY, NonEmpty, AutoExists, Exists, Existing) during query resolution. Depending on the complexity of the calculation(s) involved, this type of expression can be evaluated in either bulk mode or cell-by-cell mode. Bottom line, bulk mode is strongly preferred over cell-by-cell mode as the latter can be the source of severe performance problems. Fortunately, when capturing this event, we can determine the evaluation mode by looking at the integer data column in the trace.

From Identifying and Resolving MDX Query Performance Bottlenecks in SQL Server 2005 Analysis Services: The Integer column for these events can report six different values. A value of 1, 2 or 3 indicates that the formula engine used a fast NON EMPTY code path (1 for dimension autoexist, 2 for Exists, and 3 for all other NON EMPTY operations). A value of 11, 12, or 13 indicates that the formula engine used a slow NON EMPTY code path (11 for dimension autoexist, 12 for Exists, and 13 for all other NON EMPTY operations). The Duration column for the Calculate Non Empty End event reports the time in milliseconds required to perform the NON EMPTY operation and the CPU Time column reports the processor resources required.

Note: Chris Webb has a nice series of posts covering this event with examples: start here.

Query Processing Events: Query Subcube

This event provides additional information about queries executed against a multidimensional Analysis Services instance such as the number of storage engine requests (aka “Subcubes” or “Subcube requests”) and whether they were satisfied by the (SE) cache or had to go all the way to disk and retrieve the data from an aggregation or base partition.

Any time a query has to go to disk, the performance is going to be worse than if the data was already available in the cache. So if you notice a difference in performance for multiple executions of the same query the reason might be due to differences in the state of the cache at the time each query was executed. This can be come a nasty problem if you’ve been relying on a cache-warming strategy to get the performance your users demand.

Note: as a bonus, the details of this event can be used to populate the OLAPQueryLog table used to perform Usage Based Optimization. Therefore you can reduce the overhead on your Analysis Services instance by disabling the OLAPQueryLog instance-level property as its no longer needed since you’ll already be capturing the data in your performance monitoring solution.

Query Processing Events: VertiPaq SE Query End and VertiPaq SE Query Cache Match

These 2 events are the tabular-equivalent of the single Query Subcube event for multidimensional (above). Together, they provide details on the number of storage engine requests (aka “SE Queries” or “VertiPaq Queries”) and whether they were satisfied by cache or had to be pulled from disk memory.

When using the in-memory mode for tabular (which is most often the case), a vertipaq scan is typically not going to a performance problem. However, some queries will generate a lot (possibly 100’s) of vertipaq scans – these are referred to as “chatty” queries. With 600 vertipaq scans, it doesn’t matter if the average duration is only 20ms…you’re still looking at a 10+ second query.

Query Processing Events: Direct Query End

This event captures information (e.g. SQL text, duration, etc) about the SQL query generated for tabular models configured to run in direct query mode.

Query Processing Events: Resource Usage

This event contains detailed information about storage engine activity – most of which can be derived from the [Query Subcube] or [VertiPaq SE Query End] events. However, there is one piece of information this event provides which is the number of rows scanned for a query. This is helpful with performance tuning activities as adjustments to the data model, calculated measures, and/or query text (MDX/DAX) can result in reducing the number of rows scanned which is correlated (not always, but most of the time) with faster/better query performance. Additionally, it’s sometimes interesting/helpful to review the rows scanned for all executions of a single query.

For more information, check out these 2 posts by Chris Webb: here and here.

Note: the value for rows scanned is the total for the entire query – not per storage engine request. If query execution results in multiple SE requests then this value contains the sum of all rows scanned. Similarly, if all SE requests are satisfied by cache then you will see 0 rows scanned.

These next 2 events are related to capturing error information – which you should be doing – and can help uncover issues such as file corruption or “duplicate attribute key” errors during processing.

Errors and Warnings Events: Error

Progress Reports: Progress Report Error

Finally a quick comment on a new event that only applies to Analysis Services Tabular instances.

Query Processing Events: Dax Query Plan

This event captures the logical and physical query plan for DAX queries. Thanks in large part to the work of Marco and Alberto, the rest of the community (myself included) is now able to extract helpful information from these events. However, it would be ill-advised to include this event in a performance monitoring trace (i.e. one that will be left running 24/7) on a busy system as the data returned can be quite large. When working w/ a client last year, we had a 7 line DAX query that returned plans with several thousand lines of text. A more pragmatic approach would be to capture this event only under special circumstances (e.g. performance tuning a single query).

Note: this event is missing from the Technet documentation – nevertheless, I assure you its available 😉

Conclusion

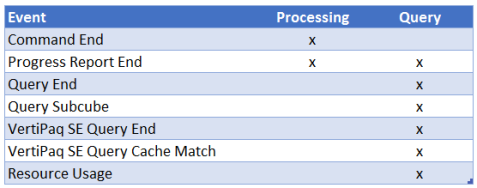

In this post we ran through the list of Extended Events that you’ll want to collect along with an explanation on the type of performance-related information you can derive from them. Depending on the type of instance you’re monitoring, we’re only really only talking about 5 (multidimensional) or 6 (tabular) events that need to be captured.

Here’s a table to help you keep it straight…

And yes, there’s a bit more complexity once you start breaking down these events into subevents – but don’t worry we’ll get to that soon enough. For now, whenever you have some free time, start playing around (in your NON-PRODUCTION environment) with extended events traces.

Here are 2 links to help you get started: one, two

Next post will be very similar to this post except we’re going to cover Perform counters. After that we’ll finally be ready to do some really cool stuff which involves correlating xevent data with perfmon data which will help us answer that million dollar question: Why?!

If you have any questions, please feel free to ask in the comments.

Additional References

- Analysis Services MOLAP Performance Guide for SQL Server 2012 and 2014

- Performance Tuning of Tabular Models in SQL Server 2012 Analysis Services

- Identifying and Resolving MDX Query Performance Bottlenecks in SQL Server 2005 Analysis Services

- Using and Understanding DAX Query Plans

- Expert Cube Development with SSAS Multidimensional Models

6 replies on “Performance Monitoring for SSAS – Extended Events Cheat Sheet”

[…] Bill Anton has a cheat sheet for SSAS performance monitoring extended events: […]

LikeLike

[…] collect and store performance counters and SSAS logs out there, and I suggest you look into this, this or that, if you need […]

LikeLike

[…] Performance Monitoring for SSAS – Extended Events Cheat Sheet […]

LikeLike

[…] Performance Monitoring for SSAS – Extended Events Cheat Sheet […]

LikeLike

[…] written about extended events for Analysis Services quite a bit (here, here, here, here, here) because I think they’re super helpful and very important in […]

LikeLike

[…] Performance Monitoring for SSAS – Extended Events Cheat Sheet […]

LikeLike